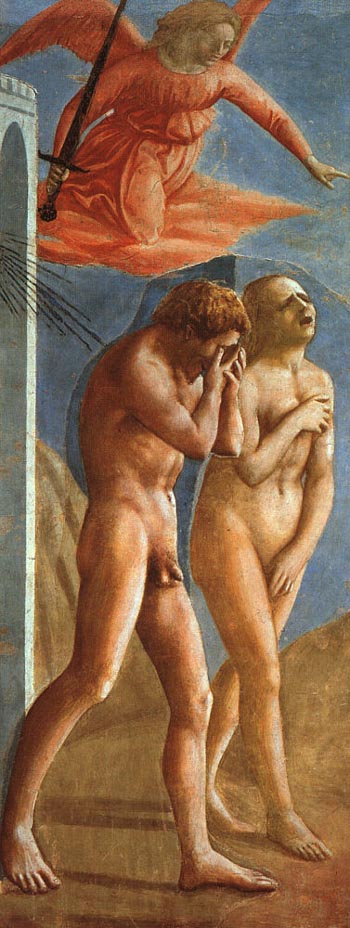

"Adam and Eve Expelled from Paradise"

Masaccio - 1427

David Seaton's News Links

Here in this article is probably the most revolutionary discovery of our age...(no kidding) with the most far-reaching political consequences imaginable. There is now scientific proof that Jean-Jacques Rousseau was right in theorizing that human beings are naturally good and only deformed by society. The National Institutes of Health's studies, quoted below, do nothing less than prove that the doctrine of Original Sin does not fit observable data. Altruism is demonstrably "hard wired" into our brains. Since, aside from greed, the question of whether humanity is perfectible here on earth or innately depraved is the issue that fundamentally divides the left from the right and defines their world views, you could call the studies cited in this Washington Post article "game, set and match": the end of the "conservative revolution".

Although it tears holes in the intricate lacework of Christian theology, this new view of human ethics and morality may reawaken much of the world spiritually. The logic of Christian theology depends on the existence of a God who created this evil creature (the human being) to "love" him. For this befouled, yet somehow adorable, creature to be saved from its evil ways, God had to engender a human son and then sacrifice this son cruelly - sacrifice him to himself, that is.

Certainly this new view of human nature is easier to fit God into than the one I have just outlined. In this new view God has created a creature with all that is needed to survive and prosper - the way a good father should - and then left his children to work out for themselves how to do it, also as a good father should. DS

If It Feels Good to Be Good, It Might Be Only Natural - Washington Post

By Shankar Vedantam

May 28, 2007; A01

The e-mail came from the next room.

"You gotta see this!" Jorge Moll had written. Moll and Jordan Grafman, neuroscientists at the National Institutes of Health, had been scanning the brains of volunteers as they were asked to think about a scenario involving either donating a sum of money to charity or keeping it for themselves.

As Grafman read the e-mail, Moll came bursting in. The scientists stared at each other. Grafman was thinking, "Whoa -- wait a minute!"

The results were showing that when the volunteers placed the interests of others before their own, the generosity activated a primitive part of the brain that usually lights up in response to food or sex. Altruism, the experiment suggested, was not a superior moral faculty that suppresses basic selfish urges but rather was basic to the brain, hard-wired and pleasurable.

Their 2006 finding that unselfishness can feel good lends scientific support to the admonitions of spiritual leaders such as Saint Francis of Assisi, who said, "For it is in giving that we receive." But it is also a dramatic example of the way neuroscience has begun to elbow its way into discussions about morality and has opened up a new window on what it means to be good.

Grafman and others are using brain imaging and psychological experiments to study whether the brain has a built-in moral compass. The results -- many of them published just in recent months -- are showing, unexpectedly, that many aspects of morality appear to be hard-wired in the brain, most likely the result of evolutionary processes that began in other species.

No one can say whether giraffes and lions experience moral qualms in the same way people do because no one has been inside a giraffe's head, but it is known that animals can sacrifice their own interests: One experiment found that if each time a rat is given food, its neighbor receives an electric shock, the first rat will eventually forgo eating.

What the new research is showing is that morality has biological roots -- such as the reward center in the brain that lit up in Grafman's experiment -- that have been around for a very long time.

The more researchers learn, the more it appears that the foundation of morality is empathy. Being able to recognize -- even experience vicariously -- what another creature is going through was an important leap in the evolution of social behavior. And it is only a short step from this awareness to many human notions of right and wrong, says Jean Decety, a neuroscientist at the University of Chicago.

The research enterprise has been viewed with interest by philosophers and theologians, but already some worry that it raises troubling questions. Reducing morality and immorality to brain chemistry -- rather than free will -- might diminish the importance of personal responsibility. Even more important, some wonder whether the very idea of morality is somehow degraded if it turns out to be just another evolutionary tool that nature uses to help species survive and propagate.

Moral decisions can often feel like abstract intellectual challenges, but a number of experiments such as the one by Grafman have shown that emotions are central to moral thinking. In another experiment published in March, University of Southern California neuroscientist Antonio R. Damasio and his colleagues showed that patients with damage to an area of the brain known as the ventromedial prefrontal cortex lack the ability to feel their way to moral answers.

When confronted with moral dilemmas, the brain-damaged patients coldly came up with "end-justifies-the-means" answers. Damasio said the point was not that they reached immoral conclusions, but that when confronted by a difficult issue -- such as whether to shoot down a passenger plane hijacked by terrorists before it hits a major city -- these patients appear to reach decisions without the anguish that afflicts those with normally functioning brains.

Such experiments have two important implications. One is that morality is not merely about the decisions people reach but also about the process by which they get there. Another implication, said Adrian Raine, a clinical neuroscientist at the University of Southern California, is that society may have to rethink how it judges immoral people.

Psychopaths often feel no empathy or remorse. Without that awareness, people relying exclusively on reasoning seem to find it harder to sort their way through moral thickets. Does that mean they should be held to different standards of accountability?

"Eventually, you are bound to get into areas that for thousands of years we have preferred to keep mystical," said Grafman, the chief cognitive neuroscientist at the National Institute of Neurological Disorders and Stroke. "Some of the questions that are important are not just of intellectual interest, but challenging and frightening to the ways we ground our lives. We need to step very carefully."

Joshua D. Greene, a Harvard neuroscientist and philosopher, said multiple experiments suggest that morality arises from basic brain activities. Morality, he said, is not a brain function elevated above our baser impulses. Greene said it is not "handed down" by philosophers and clergy, but "handed up," an outgrowth of the brain's basic propensities.

Moral decision-making often involves competing brain networks vying for supremacy, he said. Simple moral decisions -- is killing a child right or wrong? -- are simple because they activate a straightforward brain response. Difficult moral decisions, by contrast, activate multiple brain regions that conflict with one another, he said.

In one 2004 brain-imaging experiment, Greene asked volunteers to imagine that they were hiding in a cellar of a village as enemy soldiers came looking to kill all the inhabitants. If a baby was crying in the cellar, Greene asked, was it right to smother the child to keep the soldiers from discovering the cellar and killing everyone?

The reason people are slow to answer such an awful question, the study indicated, is that emotion-linked circuits automatically signaling that killing a baby is wrong clash with areas of the brain that involve cooler aspects of cognition. One brain region activated when people process such difficult choices is the inferior parietal lobe, which has been shown to be active in more impersonal decision-making. This part of the brain, in essence, was "arguing" with brain networks that reacted with visceral horror.

Such studies point to a pattern, Greene said, showing "competing forces that may have come online at different points in our evolutionary history. A basic emotional response is probably much older than the ability to evaluate costs and benefits."

While one implication of such findings is that people with certain kinds of brain damage may do bad things they cannot be held responsible for, the new research could also expand the boundaries of moral responsibility. Neuroscience research, Greene said, is finally explaining a problem that has long troubled philosophers and moral teachers: Why is it that people who are willing to help someone in front of them will ignore abstract pleas for help from those who are distant, such as a request for a charitable contribution that could save the life of a child overseas?

"We evolved in a world where people in trouble right in front of you existed, so our emotions were tuned to them, whereas we didn't face the other kind of situation," Greene said. "It is comforting to think your moral intuitions are reliable and you can trust them. But if my analysis is right, your intuitions are not trustworthy. Once you realize why you have the intuitions you have, it puts a burden on you" to think about morality differently.

Marc Hauser, another Harvard researcher, has used cleverly designed psychological experiments to study morality. He said his research has found that people all over the world process moral questions in the same way, suggesting that moral thinking is intrinsic to the human brain, rather than a product of culture. It may be useful to think about morality much like language, in that its basic features are hard-wired, Hauser said. Different cultures and religions build on that framework in much the way children in different cultures learn different languages using the same neural machinery.

Hauser said that if his theory is right, there should be aspects of morality that are automatic and unconscious -- just like language. People would reach moral conclusions in the same way they construct a sentence without having been trained in linguistics. Hauser said the idea could shed light on contradictions in common moral stances.

U.S. law, for example, distinguishes between a physician who removes a feeding tube from a terminally ill patient and a physician who administers a drug to kill the patient.

Hauser said the only difference is that the second scenario is more emotionally charged -- and therefore feels like a different moral problem, when it really is not: "In the end, the doctor's intent is to reduce suffering, and that is as true in active as in passive euthanasia, and either way the patient is dead."

4 comments:

Don't get too excited. We are hard-wired for both selfishness AND altruism. Both serve necessary functions at different times and in different circumstances.

The defining idea of the left has always been that human beings are intrinsically good and that the structures of society have created a web of interests that make it impossible for men and women to realize their authentic nature. The goodness is natural and the selfishness is artificial.

For the left, this idea is like the Holy Trinity for a Catholic: you don't have to believe it, but if you don't, you don't really belong to the club.

This research is as if the Holy Trinity appeared on the balcony overlooking St. Peter's Square and introduced themselves to the crowd. It would have an electrifying effect on the faithful.

I think a more appropriate and valid reference would not be to Rousseau--that lover of humanity who abandoned his five children to almost certain death in the orphanage--but to Adam Smith's Theory of Moral Sentiments.

Rousseau has more fans on the left than Smith does. I suppose that is because he was more radical in blaming the social structure instead of human nature... And of course most of what we know about Rousseau - good and bad - we know because he told us.

The "social contract" and the "noble savage" we owe to him. What would Henry David Thoreau have been without Rousseau? And of course, most great men make lousy fathers.